In today's data-driven world, the ability to extract and analyze data from websites is crucial for businesses and developers seeking valuable insights. Web scraping, particularly using Application Programming Interfaces (APIs), has become a popular method for extracting data efficiently and ethically.

In this article, we will explore the best practices for maximizing data extraction using website APIs.

Understand the API Documentation: Before scraping any website using its API, it is essential to thoroughly read and understand the API documentation. This documentation provides valuable information on how to authenticate requests, the rate limits, and the data format returned by the API.
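
As a rough illustration of what the documentation typically tells you, the sketch below builds an authenticated request and parses a JSON response. The base URL, the `/v1/items` path, the bearer-token scheme, and the `items` field are all assumptions made for the example; a real API's documentation defines its own.

```python
import json
from urllib.request import Request

# Hypothetical value: replace with whatever the API's documentation specifies.
BASE_URL = "https://api.example.com"


def build_request(path: str, token: str) -> Request:
    """Build (but do not send) a GET request with bearer-token auth."""
    return Request(
        f"{BASE_URL}{path}",
        headers={
            "Authorization": f"Bearer {token}",  # assumed auth scheme
            "Accept": "application/json",        # assumed response format
        },
    )


def parse_items(body: str) -> list:
    """Extract the (assumed) top-level "items" array from a JSON body."""
    return json.loads(body).get("items", [])
```

The request object can then be passed to `urllib.request.urlopen`, and the decoded response body to `parse_items`.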

Respect robots.txt: The robots.txt file, served from the root of a website, tells automated clients which paths they may crawl and which they should avoid. Always follow the rules it sets to avoid legal issues and maintain a good relationship with the website owner.
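
One way to check these rules in code is Python's standard `urllib.robotparser` module. The sketch below parses a small, made-up robots.txt from a string so it runs without network access; the user agent name `my-bot` and the `example.com` URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real client would download it from
# the site root (for example with RobotFileParser.set_url() and read()).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a parser that can answer access queries."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Paths outside /private/ are allowed, paths inside it are not.
allowed = parser.can_fetch("my-bot", "https://example.com/products/1")
blocked = parser.can_fetch("my-bot", "https://example.com/private/data")

# Requested pause between requests in seconds, or None if not specified.
delay = parser.crawl_delay("my-bot")
```

Calling `can_fetch` before every request keeps the check close to the code that actually sends traffic.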

Use Rate Limiting: Most APIs have rate limits to prevent abuse and ensure fair usage. Always adhere to these rate limits to avoid being blocked by the API provider. Implementing rate limiting on your end can also help prevent accidental overloading of the API.
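
Client-side rate limiting can be as simple as spacing requests evenly. The following is a minimal sketch, not a production limiter: the `RateLimiter` class and its parameters are illustrative names, and the clock and sleep functions are injectable only so the behavior can be tested without real waiting.

```python
import time


class RateLimiter:
    """Space calls so that at most `max_calls` happen per `period` seconds."""

    def __init__(self, max_calls, period, clock=time.monotonic, sleep=time.sleep):
        if max_calls <= 0 or period <= 0:
            raise ValueError("max_calls and period must be positive")
        self.min_interval = period / max_calls
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = None  # earliest time the next call may start

    def wait(self):
        """Block until the next call is allowed; return the seconds waited."""
        now = self._clock()
        delay = 0.0
        if self._next_allowed is not None and now < self._next_allowed:
            delay = self._next_allowed - now
            self._sleep(delay)
            now = self._clock()
        self._next_allowed = now + self.min_interval
        return delay
```

A scraper would create one limiter per API, for example `RateLimiter(max_calls=60, period=60.0)` for a limit of 60 requests per minute, and call `wait()` immediately before each request.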

Use Efficient Scraping Techniques: When scraping data from websites, use efficient techniques to minimize the number of requests and maximize the amount of data retrieved in each request. For example, use batch requests to retrieve multiple items in a single request instead of making individual requests for each item.
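
To make the batching idea concrete, the sketch below groups item IDs into fixed-size batches and builds one URL per batch. It assumes a hypothetical endpoint that accepts a comma-separated `ids` query parameter; whether an API supports batching, and under which parameter name and maximum batch size, depends on its documentation.

```python
from urllib.parse import urlencode


def chunked(items, size):
    """Yield consecutive slices of `items` with at most `size` elements."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def build_batch_urls(base_url, ids, batch_size):
    """Return one URL per batch instead of one URL per item."""
    urls = []
    for batch in chunked(ids, batch_size):
        # "ids" is an assumed parameter name; safe="," keeps commas readable.
        query = urlencode({"ids": ",".join(str(i) for i in batch)}, safe=",")
        urls.append(f"{base_url}?{query}")
    return urls
```

With a batch size of 50, fetching 1,000 items takes 20 requests rather than 1,000.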

Handle Errors Gracefully: Requests can fail for reasons such as network issues or server errors. Handle these failures gracefully by retrying requests that are likely to succeed on a later attempt and by logging every error for later analysis.
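
A common way to implement a retry mechanism is exponential backoff, where the wait doubles after each failed attempt. The sketch below is one possible shape for it: `TransientError` and `with_retries` are hypothetical names, and the caller is assumed to raise `TransientError` for failures worth retrying (timeouts or 5xx responses, for instance).

```python
import logging
import time

logger = logging.getLogger("scraper")


class TransientError(Exception):
    """Hypothetical marker for failures that may succeed on retry."""


def with_retries(func, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call `func`, retrying on TransientError with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError as exc:
            if attempt == max_attempts:
                logger.error("giving up after %d attempts: %s", attempt, exc)
                raise
            # Wait 1x, 2x, 4x, ... the base delay before the next attempt.
            delay = base_delay * 2 ** (attempt - 1)
            logger.warning(
                "attempt %d failed (%s); retrying in %.1fs", attempt, exc, delay
            )
            sleep(delay)
```

Errors that are not transient, such as a rejected API key, propagate immediately instead of being retried.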

Monitor API Usage: Keep track of your API usage to ensure you are not exceeding the limits set by the API provider. Monitoring API usage can also help you identify any issues with your scraping process and optimize it for better performance.
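
Usage monitoring can start with a simple in-process counter. The sketch below tallies responses by HTTP status code against an assumed fixed quota; the `UsageTracker` class is illustrative, and a real setup would also reset the count at the start of each quota window and might read the remaining quota from the provider's response headers where those are documented.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class UsageTracker:
    """Count requests by status code against an assumed request quota."""

    quota: int
    status_counts: Counter = field(default_factory=Counter)

    def record(self, status_code: int) -> None:
        """Register one completed request."""
        self.status_counts[status_code] += 1

    @property
    def total(self) -> int:
        return sum(self.status_counts.values())

    @property
    def remaining(self) -> int:
        """Requests left before the quota is exhausted (never negative)."""
        return max(self.quota - self.total, 0)

    def error_rate(self) -> float:
        """Fraction of requests that returned a 4xx or 5xx status."""
        if self.total == 0:
            return 0.0
        errors = sum(n for code, n in self.status_counts.items() if code >= 400)
        return errors / self.total
```

A rising error rate or a fast-shrinking `remaining` value is an early signal that the scraping schedule needs adjusting.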

Respect Copyright and Privacy Laws: When scraping data from websites, always respect copyright and privacy laws. Ensure that the data you are scraping is either not protected by copyright or that you have the necessary permissions to use it, and handle any personal data in line with the privacy laws that apply to you.

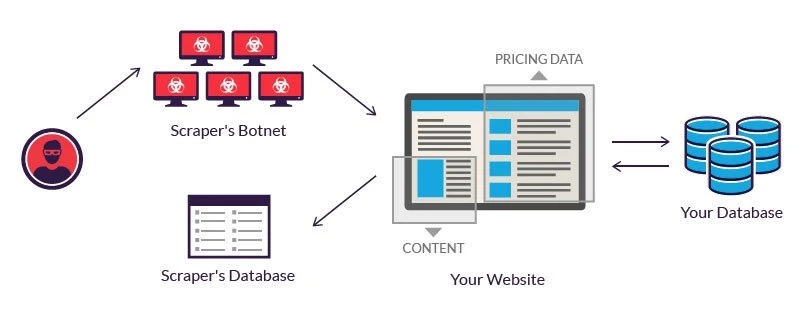

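Use Proxies: To reduce the risk of being blocked by websites, consider routing requests through a pool of proxy servers. Rotating proxies spread your requests across several IP addresses, making it harder for websites to detect and block your scraping activities. Proxies do not exempt you from the provider's rate limits or terms of use.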
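
Rotation itself is straightforward to sketch with `itertools.cycle`. The proxy addresses below are placeholders, and `ProxyRotator` is a hypothetical helper; the dictionary it returns uses the scheme-to-URL mapping accepted by `urllib.request.ProxyHandler` and by the `proxies` argument of the `requests` library.

```python
from itertools import cycle


class ProxyRotator:
    """Hand out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._pool = cycle(proxies)

    def next_proxy(self):
        """Return the next proxy as a scheme-to-URL mapping."""
        proxy = next(self._pool)
        return {"http": proxy, "https": proxy}


# Placeholder addresses; a real pool would come from a proxy provider.
rotator = ProxyRotator([
    "http://proxy-a.example.com:8080",
    "http://proxy-b.example.com:8080",
])
```

Each outgoing request would call `rotator.next_proxy()` and pass the result to the HTTP client.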

In conclusion, scraping data through a website's API can be a powerful way to extract data from websites. By following these best practices, you can maximize your data extraction efforts while ensuring you stay within legal and ethical boundaries.