Generative AI has moved quickly from experimentation to everyday use. It now powers chat interfaces, content tools, recommendation systems, and even software development workflows. As these systems become more capable, they also introduce new security risks that traditional approaches were never designed to handle.

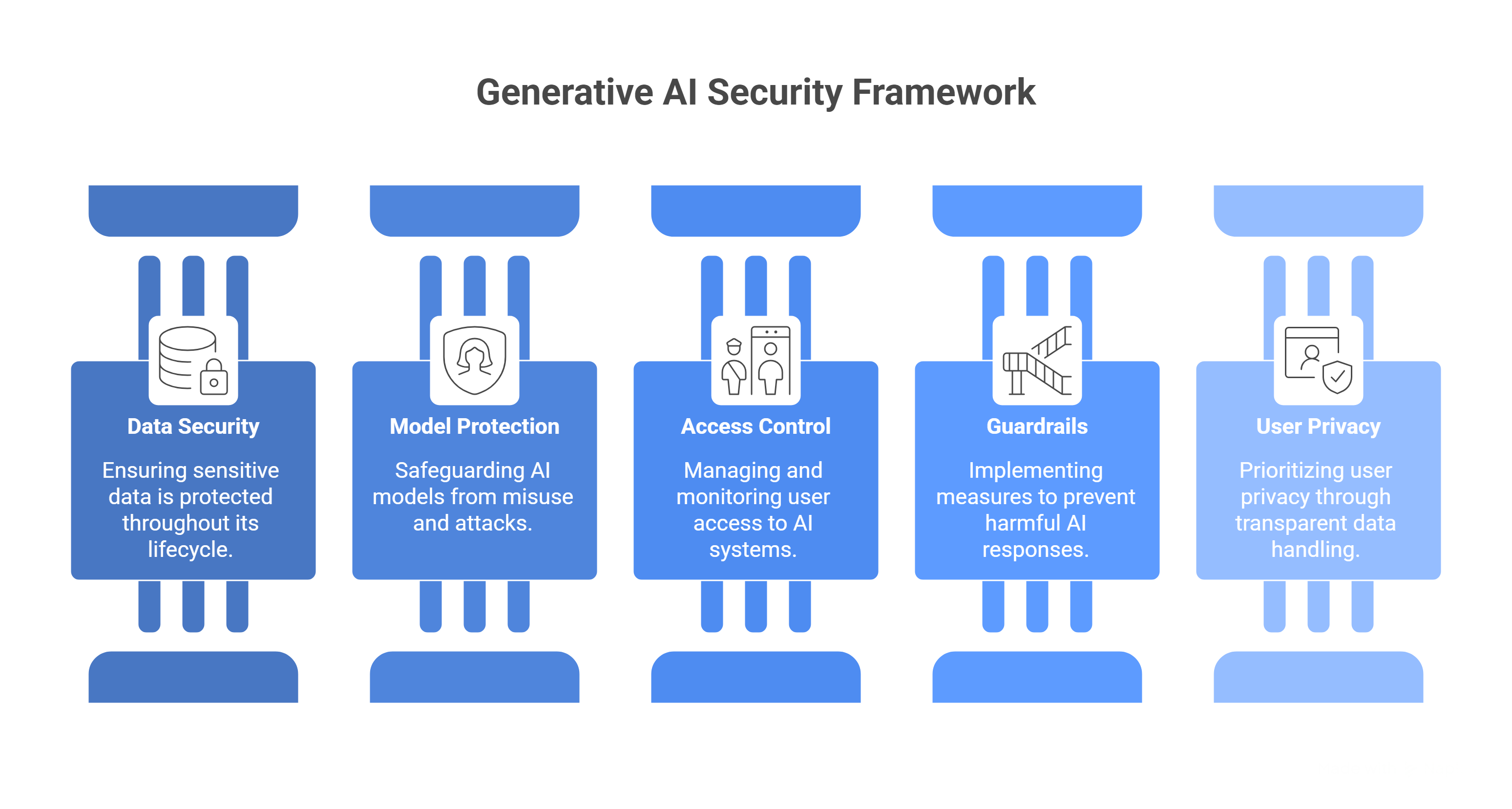

Securing generative AI is not just about protecting servers or writing safer code. It is about safeguarding data, defending AI models from misuse, and ensuring users are not exposed to harm. This guide explains how to approach security in the generative AI era with practical, real-world strategies.

Why Generative AI Requires a New Security Mindset

Traditional applications behave in predictable ways. Generative AI does not. It learns from data, adapts to inputs, and produces outputs that can vary widely based on context.

This creates several challenges:

- Sensitive data may appear in training sets or prompts

- Models can be manipulated through carefully crafted inputs

- Users may trust AI outputs more than they should

Security in this space must account for human behavior, model behavior, and data flows all at once.

Protecting Data Across the AI Lifecycle

1. Secure Data Collection and Storage

Data is the foundation of any generative AI system. If data is exposed or misused, the entire system becomes a liability.

Key practices include:

- Encrypting data at rest and in transit

- Limiting access using strict role-based permissions

- Removing or anonymizing personal identifiers

- Avoiding unnecessary data retention

These steps are especially important when datasets are shared across teams or environments during development and testing.

2. Reduce Risk During Model Training

Training data often includes internal documents, user inputs, or logs. Without proper controls, this data can leak into model outputs.

Teams working on advanced AI systems, including those delivering AI ML Development Services, often reduce this risk by curating datasets carefully and isolating sensitive information before training begins.

Securing AI Models From Abuse and Theft

1. Control Access to Model Endpoints

AI models should never be treated as open resources by default. Unauthorized access can lead to misuse, data extraction, or denial-of-service attacks.

Effective controls include:

- Authentication and authorization for all model requests

- Rate limiting to prevent automated abuse

- Monitoring for unusual usage patterns

Endpoints should be treated with the same level of protection as any critical API.

2. Prevent Prompt Injection and Manipulation

Prompt injection is one of the most common security threats in generative AI systems. Attackers attempt to override system instructions or extract sensitive information through cleverly designed inputs.

Mitigation strategies include:

- Separating system prompts from user inputs

- Validating and filtering prompts

- Applying output constraints to sensitive responses

These measures help maintain predictable and safe model behavior.

3. Protect the Model as an Asset

Trained models represent significant investment and intellectual value. If leaked, they can be copied or reverse-engineered.

Best practices include secure model storage, restricted export permissions, and deployment environments that limit direct access to model files.

Keeping Users Safe in AI-Driven Products

1. Prevent Harmful or Misleading Outputs

Generative AI can produce incorrect, biased, or unsafe content. This becomes a security issue when users rely on outputs for decisions or actions.

To protect users:

- Apply moderation filters and safety rules

- Test models for edge cases and misuse scenarios

- Clearly communicate limitations to users

User trust depends on transparency as much as technical safeguards.

2. Protect User Privacy During Interactions

Many users share personal or sensitive information during AI interactions, often without realizing it.

Privacy-focused design includes:

- Not using live user inputs for training without consent

- Defining clear data retention policies

- Giving users control over their data

This approach is particularly important for consumer-facing applications built by an app development company australia, where privacy expectations and regulatory standards are high.

Governance and Team-Level Security Practices

1. Establish Clear AI Governance

Security cannot be an afterthought. It needs ownership, policies, and accountability.

Strong governance frameworks define:

- Who is responsible for AI systems

- How data and models are approved and updated

- When security reviews are required

This becomes even more important when development is distributed.

2. Secure Collaboration in Distributed Teams

Many AI products are built by globally distributed teams. Working with a Remote Software Development Team can increase productivity, but it also requires clear security standards, controlled access, and consistent processes to prevent accidental exposure.

Shared documentation, secure tooling, and regular reviews help maintain alignment.

Build Security Into the Development Process

The most effective GenAI security strategies are built into the development lifecycle, not added at the end.

This includes:

- Reviewing datasets before training

- Testing models against misuse scenarios

- Monitoring outputs after deployment

Security is an ongoing process that evolves as models and user behavior change.

Final Thoughts

Generative AI brings enormous potential, but it also changes what security means in practice. Protecting data, securing AI models, and keeping users safe requires a broader and more thoughtful approach than traditional application security.

When teams treat AI security as part of product quality rather than a technical checkbox, they build systems that users can trust. In the generative AI era, that trust is just as important as innovation itself.