Top 5 benefits of reverse and forward proxies

Reverse proxies

1. Load balancing

A reverse proxy distributes incoming client traffic across a website's back-end servers so that no single server becomes overloaded (see Figure 3). Amazon, for example, is one of the most popular e-commerce websites and most likely receives a large amount of incoming traffic every second. A single origin server would struggle to handle all of that traffic; it would become overloaded and unavailable. Reverse proxies help website owners balance a high volume of incoming traffic across multiple origin servers.
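
As a rough illustration of the idea, here is a minimal round-robin sketch in Python. Round-robin is only one of several possible balancing strategies, and the class name `RoundRobinBalancer` and the back-end addresses are made up for this example; a real reverse proxy would also track server health and load.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out back-end servers in a fixed rotating order."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = cycle(list(backends))

    def pick(self):
        # Each call returns the next backend, wrapping around at the end.
        return next(self._backends)


# Hypothetical origin servers sitting behind the reverse proxy.
balancer = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"])

# Six incoming requests are spread evenly: each server receives two.
assignments = [balancer.pick() for _ in range(6)]
```

With three back ends, no server handles more than a third of the requests, which is the effect the reverse proxy is after.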

2. Caching

Reverse proxies cache frequently requested content, often on servers located close to clients, so that it is ready for future requests. Caching allows clients to access the content more quickly. The origin server does not have to be contacted every time the same information is needed. Instead, the reverse proxy retrieves the data once, stores it, and serves the stored copy for faster access.
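
The caching behaviour can be sketched in a few lines of Python. This is a simplified, time-based cache and not how any particular proxy implements it; `ResponseCache`, `fake_origin`, and the 60-second lifetime are assumptions chosen for the example.

```python
import time


class ResponseCache:
    """Stores origin responses for a limited time (TTL) and reuses them."""

    def __init__(self, fetch_from_origin, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # path -> (expires_at, body)

    def get(self, path):
        now = self._clock()
        entry = self._entries.get(path)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the origin is not contacted
        body = self._fetch(path)  # cache miss or expired entry
        self._entries[path] = (now + self._ttl, body)
        return body


origin_calls = []


def fake_origin(path):
    # Stand-in for a slow request to the origin server.
    origin_calls.append(path)
    return f"content of {path}"


cache = ResponseCache(fake_origin, ttl_seconds=60.0)
first = cache.get("/products")   # fetched from the origin
second = cache.get("/products")  # served from the cache
```

Both calls return the same content, but the origin is contacted only once.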

3. SSL encryption

A reverse proxy can decrypt incoming client traffic and encrypt outgoing web server traffic (TLS/SSL). Communications between clients and the website are encrypted so that no one intercepting the traffic can read the information being exchanged. This protects sensitive data from unauthorized parties.

Forward proxies

4. An additional layer of security

Forward proxies act as a security barrier between clients and the internet, helping to protect clients’ computers from cyberattacks. A proxy server monitors incoming and outgoing traffic in a network to detect suspicious activity and protect client data. It works much like a firewall, but the two differ significantly in the methods and techniques they employ.

5. Webpage crawl rate

The crawl rate is the number of requests a web scraping bot can make to a website per minute, hour, or day. Websites set crawl rate limits to restrict web scraping activities. If you make requests too frequently to the same website, it will flag your activity as suspicious and block your IP address from accessing the content.
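
To make the mechanism concrete, here is a hedged sketch of how a website might enforce a per-IP crawl rate limit using a sliding time window. Real sites use a variety of detection techniques; the class name `CrawlRateLimiter`, the limit of three requests per 60 seconds, and the IP address are assumptions for illustration.

```python
from collections import defaultdict, deque


class CrawlRateLimiter:
    """Allows at most max_requests per IP within a sliding time window."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # ip -> timestamps of recent requests

    def allow(self, ip, now):
        recent = self._history[ip]
        # Forget requests that have fallen out of the time window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return False  # over the limit: the site would block this request
        recent.append(now)
        return True


limiter = CrawlRateLimiter(max_requests=3, window_seconds=60)

# Four requests within a few seconds from the same (example) IP address:
# the first three pass, the fourth is rejected.
results = [limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3)]

# Once the earlier requests age out of the window, requests pass again.
later = limiter.allow("203.0.113.7", now=61)
```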

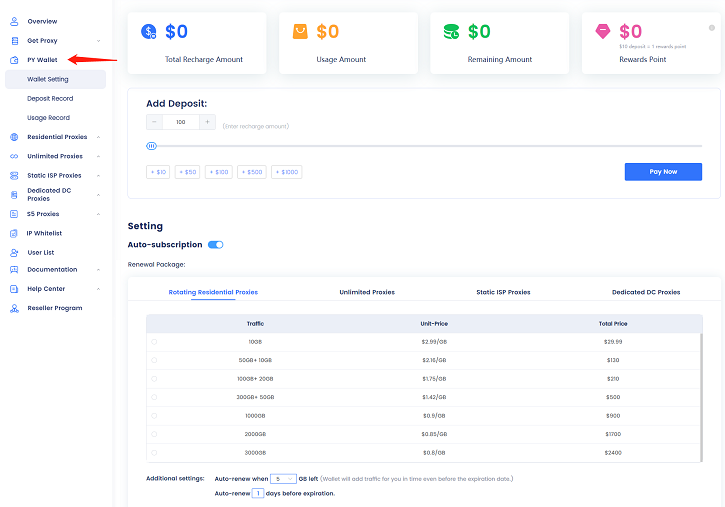

A proxy server allows businesses to collect large amounts of data while keeping scrapers anonymous. For instance, rotating proxies (a type of forward proxy) change the client’s IP address with each new request, so that no single IP address exceeds the website’s crawl rate limit.
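
The effect of rotation can be shown with a small simulation. This sketch makes no network calls; the proxy pool addresses are placeholders, and simple cycling through the pool is just one possible rotation policy.

```python
from collections import Counter
from itertools import cycle

# Hypothetical pool of proxy exit addresses (documentation-range IPs).
PROXY_POOL = ["198.51.100.10", "198.51.100.11", "198.51.100.12"]

# Count how many requests the target website would see from each IP.
requests_seen_per_ip = Counter()

rotation = cycle(PROXY_POOL)
for _ in range(6):
    exit_ip = next(rotation)  # a different proxy for each new request
    requests_seen_per_ip[exit_ip] += 1

# Without rotation, one IP would have made all 6 requests.
busiest = max(requests_seen_per_ip.values())
```

Here six requests are spread over three addresses, so the website sees only two requests from any one IP.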